The Psychology of 'Making A Murderer'

Roughly ten hours into Making a Murderer, a Netflix documentary about the murder trial of Steven Avery, his defense lawyer Dean Strang delivers the basic thesis of the show:

“The forces that caused that [the conviction of Brendan Dassey and Steven Avery]…I don’t think they are driven by malice, they’re just expressions of ordinary human failing. But the consequences are what are so sad and awful.”

Strang then goes on to elaborate on these “ordinary human failing[s]”:

“Most of what ails our criminal justice system lies in unwarranted certitude among police officers and prosecutors and defense lawyers and judges and jurors that they’re getting it right, that they simply are right. Just a tragic lack of humility of everyone who participates in our criminal justice system.”

Strang is making a psychological diagnosis. He is arguing that at the root of injustice is a cognitive error, an “unwarranted certitude” that our version is the truth, the whole truth, and nothing but the truth. In the Avery case, this certitude is most relevant when it comes to forensic evidence, as his lawyers (Dean Strang and Jerry Buting) argue that the police planted keys and blood to ensure a conviction. And then, after the evidence was discovered, Strang and Buting insist that forensic scientists working for the state distorted their analysis to fit the beliefs of the prosecution. Because they needed to find the victim’s DNA on a bullet in Avery’s garage – that was the best way to connect him to the crime – the scientists bent protocol and procedure to make a positive match.

Regardless of how you feel about the details of the Avery case, or even about the narrative techniques of Making A Murderer, the documentary raises important questions about the limitations of forensics. As such, it’s a useful antidote to all those omniscient detectives in the CSI pantheon, solving crimes with threads of hair and fragments of fingerprints. In real life, the evidence is usually imperfect and incomplete. In real life, our judgments are marred by emotions, mental short-cuts and the desire to be right. What we see is through a glass darkly.

One of the scientists who has done the most to illuminate the potential flaws of forensic science is Itiel Dror, a cognitive psychologist at University College London. Consider an experiment conducted by Dror that featured five fingerprint experts with more than ten years of experience working in the field. Dror asked these experts to examine a set of prints from Brandon Mayfield, an American attorney who’d been falsely accused of being involved with the Madrid terror attacks. The experts were instructed to assess the accuracy of the FBI’s final analysis, which concluded that Mayfield's prints were not a match. (The failures of forensic science in the Mayfield case led to a searing 2009 report from the National Academy of Sciences. I wrote about Mayfield and forensics here.)

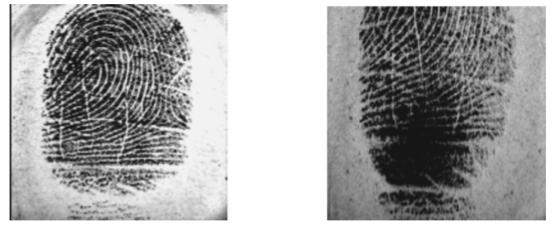

Dror was playing a trick. In reality, each set of prints was from one of the experts’ past cases, and had been successfully matched to a suspect. Nevertheless, Dror found that the new context – telling the forensic analysts that the prints came from the exonerated Mayfield - strongly influenced their judgment, as four out of five now concluded that there was insufficient evidence to link the prints. While Dror was careful to note that his data did “not necessarily indicate basic flaws” in the science of fingerprint identification – those ridges of skin remain a valid way to link suspects to a crime scene – he did question the reliability of forensic analysis, especially when the evidence gathered from the field is ambiguous. Here's an example of an ambiguous set of prints, which might give you a sense of just how difficult forensic analysis can be:

Similar results have emerged from other experiments. When Dror gave forensic analysts more typical stories about fingerprints they’d already reviewed, such as informing them that a suspect had already confessed, the new stories were able to get two-thirds of analysts to reverse their previous conclusions at least once. In an email, Dror noted that the FBI has replicated this basic finding, showing that in roughly 10 percent of cases examiners reverse their findings even when given the exact same prints. They are consistently inconsistent.

If the flaws of forensics were limited to fingerprints and other forms of evidence requiring visual interpretation, such as bite marks and hair samples, that would still be extremely worrying. (Fingerprints have been a crucial police tool since the French detective Alphonse Bertillon used a bloody print left behind on a pane of glass to secure a murder conviction in 1902.) But Dror and colleagues have shown that these same basic failings can even afflict the gold standard of forensic evidence: DNA.

The experiment went like this: Dror and Greg Hampikian presented DNA evidence from a 2002 Georgia gang rape case to 17 professional DNA examiners working in an accredited government lab. Although the suspect in question (Kerry Robinson) had pleaded not guilty, the forensic analysts in the original case concluded that he could not be excluded based on the genetic data. This testimony, write the scientists, was “critical to the prosecution.”

But was it the best interpretation of the evidence? After all, the DNA gathered from the rape victim was part of a genetic mixture, containing samples from multiple individuals. In such instances, the genetics become increasingly complicated and unclear, making forensic analysts more likely to be swayed by their presumptions and prejudices. And because crime labs are typically part of a police department, these biases are almost always tilted in the direction of the prosecution. For instance, in the Avery case, the analyst who identified the victim’s DNA on a bullet fragment had been explicitly instructed by a detective to find evidence that the victim had been “in his house or his garage.”

To explore the impact of this potentially biasing information, Dror and Hampikian sent the DNA evidence from the Georgia gang rape case to these 17 independent examiners. The only difference was that these forensic scientists did the analysis blind – they weren’t told about the grisly crime, or the corroborating testimony, or the prior criminal history of the defendants. Of the 17 experts, only one concurred with the original conclusion. Twelve directly contradicted the finding presented during the trial – they said Robinson could be excluded – and four said the sample itself was insufficient.

These inconsistencies are not an indictment of DNA evidence. Genetic data remains, by far, the most reliable form of forensic proof. And yet, when the sample contains biological material from multiple individuals, or when it’s so degraded that it cannot be easily sequenced, or when low numbers of template molecules are amplified, the visual readout provided by the DNA processing software must be actively interpreted by the forensic scientists. They are no longer passive observers – they have become the instrument of analysis, forced to fill in the blanks and make sense of what they see. And that’s when things can go astray.

The errors of forensic analysts can have tragic consequences. In Convicting the Innocent, Brandon Garrett’s investigation of more than 150 wrongful convictions, he found that “in 61 percent of the trials where a forensic analyst testified for the prosecution, the analyst gave invalid testimony.” While these mistakes occurred most frequently with less reliable forms of forensic evidence, such as hair samples, 17 percent of cases involving DNA testing also featured misleading or incorrect evidence. “All of this invalid testimony had something in common,” Garrett writes. “All of it made the forensic evidence seem like stronger evidence of guilt than it really was.”

So what can be done? In a recent article in the Journal of Applied Research in Memory and Cognition, Saul Kassin, Itiel Dror and Jeff Kukucka propose several simple ways to improve the reliability of forensic evidence. While their suggestions might seem obvious, they would represent a radical overhaul of typical forensic procedure. Here are the psychologists’ top five recommendations:

1) Forensic examiners should work in a linear fashion, analyzing the evidence (and documenting their analysis) before they compare it to the evidence taken from the target/suspect. If their initial analysis is later revised, the revisions should be documented and justified.

2) Whenever possible, forensic analysts should be shielded from potentially biasing contextual information from the police and prosecution. As the psychologists write: “We recommend, as much as possible, that forensic examiners be isolated from undue influences such as direct contact with the investigating officer, the victims and their families, and other irrelevant information—such as whether the suspect had confessed.”

3) When attempting to match evidence from the field to that taken from a target/suspect, forensic analysts should be given multiple samples to test, and not just a single sample taken from the suspect. This recommendation is analogous to the eyewitness lineup, in which eyewitnesses are asked to identify a suspect among a pool of six other individuals. Previous research looking at the use of an "evidence lineup" with hair samples found that introducing additional samples reduced the false positive error rate from 30.4 percent to 3.8 percent.

4) When a second forensic examiner is asked to verify a judgment, the verification should be done blindly. The “verifier” should not be told about the initial conclusion or given the identity of the first examiner.

5) Forensic training should also include lessons in basic psychology relevant to forensic work. Examiners should be introduced to the principles of perception (the mind is not a camera), judgment and decision-making (we are vulnerable to a long list of biases and foibles) and social influence (it’s potent).

The good news is that change is occurring, albeit at a slow pace. Many major police forces – including the NYPD, SFPD, and FBI – have started introducing these psychological concepts to their forensic examiners. In addition, leading forensic organizations, such as the US National Commission on Forensic Science, have endorsed Dror’s work and recommendations.

But fixing the practice of forensics isn’t enough: Kassin, Dror and Kukucka also recommend changes to the way scientific evidence is treated in the courtroom. “We believe it is important that legal decision makers be educated with regard to the procedures by which forensic examiners reached their conclusions and the information that was available to them at that time,” they write. The psychologists also call for a reconsideration of the “harmless error doctrine,” which holds that trial errors can be tolerated provided they aren’t sufficient to reverse the guilty verdict. Kassin, Dror and Kukucka point out that this doctrine assumes that all evidence is analyzed independently. Unfortunately, such independence is often compromised, as a false confession or other erroneous “facts” can easily influence the forensic analysis. (This is a possible issue in the Avery case, as Brendan Dassey’s confession – which contains clearly false elements and was elicited using very troubling police techniques – might have tainted conclusions about the other evidence. I've written about the science of false confessions here.) And so error begets error; our beliefs become a kind of blindness.

It’s important to stress that, in most instances, these failures of forensics don't require intentionality. When Strang observes that injustice is not necessarily driven by malice, he's pointing out all the sly and subtle ways that the mind can trick itself, slouching towards deceit while convinced it's pursuing the truth. These failures are part of life, a basic feature of human nature, but when they occur in the courtroom the stakes are too great to ignore. One man’s slip can take away another man’s freedom.

Dror, Itiel E., David Charlton, and Ailsa E. Péron. "Contextual information renders experts vulnerable to making erroneous identifications." Forensic Science International 156.1 (2006): 74-78.

Ulery, Bradford T., et al. "Repeatability and reproducibility of decisions by latent fingerprint examiners." PLoS ONE 7.3 (2012): e32800.

Dror, Itiel E., and Greg Hampikian. "Subjectivity and bias in forensic DNA mixture interpretation." Science & Justice 51.4 (2011): 204-208.

Kassin, Saul M., Itiel E. Dror, and Jeff Kukucka. "The forensic confirmation bias: Problems, perspectives, and proposed solutions." Journal of Applied Research in Memory and Cognition 2.1 (2013): 42-52.